Nvidia's original GTX Titan benchmarked 11 years later — $1,000 card now 'barely usable' in modern titles, often beaten by AMD's sub-$200 RX 6400

How the tables have turned for Nvidia's flagship Kepler GPU.

PC Games Hardware Germany has gone back and retested Nvidia's GTX Titan, which launched over a decade ago, to see how it performs in 2024. The review outlet found that the tables have turned on the Kepler GPU, and most games made today are not compatible due to missing features. Of the few modern games that do run on what was once among the best graphics cards for gaming, only a handful are playable at ultra settings.

It's worth mentioning that the particular GPU PCGH reviewed was the original GeForce GTX Titan that was unveiled in 2013, based on Nvidia's Kepler architecture. There have been multiple iterations of the Titan throughout the years, with some sharing names with their predecessors, so it's a bit difficult to keep track of each Titan SKU. The Kepler-based GTX Titan debuted with 6GB of GDDR5 memory operating on a 384-bit bus, with 2,688 cores, 876MHz boost clock, and a 250W TDP.

Despite being Nvidia's flagship GPU from 2013, PCGH found that the Kepler GPU is a very poor gaming GPU in 2024. The biggest issue with the GPU is its age and lack of modern features. It barely qualifies as a DirectX 12 compatible GPU at all, meeting only the base specification without any extra features. It also only supports DirectX 12 through software emulation, which is less efficient than native hardware support. This is because DirectX 12 was released in 2015, two years after the GTX Titan debuted.

As a result, many popular titles today, such as Call of Duty, Guardians of the Galaxy, Forza Horizon 5, and many more, wouldn't even start on the GTX Titan due to compatibility issues. That applies to all Kepler-era GPUs, incidentally: quite a few games simply refuse to run.

Of the titles that did launch, the GTX Titan could barely hit playable frame rates in most games at 1080p ultra settings. It's a big departure from 11 years ago, when the GTX Titan could smoke any game you ran on it, even at 1440p.

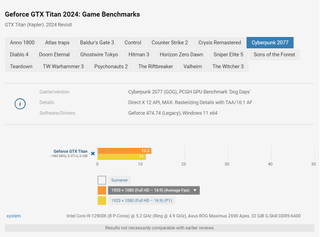

Crysis Remastered only achieved 31 FPS on the GTX Titan, and The Riftbreaker, which isn't a particularly demanding game, could only hit 33 FPS. In Cyberpunk 2077, the Kepler GPU only managed 12.3 FPS, and that's without ray tracing enabled (which the GPU of course doesn't support). The only titles PCGH tested that could get near 50 FPS were Diablo 4, Ghostwire Tokyo, and Psychonauts 2. The rest either achieved around 30 FPS or well under 30 FPS.

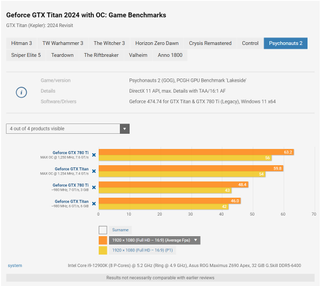

With overclocking, the story changes quite a bit. Overclocked to 1,250MHz, the GTX Titan saw a 34% performance boost overall, enabling the GPU to hit playable framerates in games that otherwise weren't. For example, in Control the GTX Titan only achieved 24.8 FPS at stock, but with overclocking it was able to run at a playable 33 FPS. Psychonauts 2 is the only game in PCGH's test suite that got to almost 60 FPS on the GTX Titan, after overclocking. Without overclocking the game ran at 46 FPS, but with overclocking the game ran at 59.8 FPS.
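To put the per-game numbers next to the overall figure, here is a minimal sketch of the uplift arithmetic. It uses only the stock and overclocked frame rates quoted above; the function name `uplift_percent` and the `results` table are illustrative and do not come from PCGH's methodology.

```python
def uplift_percent(stock_fps: float, overclocked_fps: float) -> float:
    """Relative frame-rate gain of the overclocked run over stock, in percent."""
    return (overclocked_fps / stock_fps - 1.0) * 100.0


# Frame rates quoted in the article: (stock FPS, overclocked FPS).
results = {
    "Control": (24.8, 33.0),
    "Psychonauts 2": (46.0, 59.8),
}

for game, (stock, overclocked) in results.items():
    gain = uplift_percent(stock, overclocked)
    print(f"{game}: {gain:.1f}% faster when overclocked")
```

Control works out to roughly a 33% gain and Psychonauts 2 to 30%, both in the neighborhood of the 34% overall boost PCGH reported.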

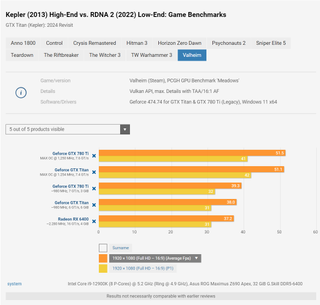

For comparison, PC Games Hardware also threw an RX 6400 into its testing suite to see what a modern-day discrete GPU can do against Nvidia's best GPU from 2013. The RX 6400 is by no means a fast GPU, but it has all the modern-day features and optimizations we've come to expect.

PCGH found that the RX 6400, running at stock clocks (it doesn't support overclocking), beat the stock GTX Titan in 9 of the 12 titles tested. Once overclocked, however, the GTX Titan turned the tables, beating the RX 6400 in most of the tested games.

The GTX Titan had a good run

PCGH's testing confirms that the GTX Titan is no longer a competitive gaming GPU in 2024. Its lack of DirectX 12 features is the primary reason for its downfall, along with the end of Game Ready driver support in 2021. That said, if you've been holding on to your GTX Titan for the past 11 years, you've likely gotten your money's worth out of it (even at its overpriced MSRP).

Of course, you can run many games at lower graphics settings to boost FPS, but the GTX Titan is a flagship graphics card that was originally intended to provide the best gaming experience possible during its era. This is why PCGH tested the Titan at ultra settings rather than high, medium or low settings.

There are a few traits of the GTX Titan that are still decent even today. The biggest one is its VRAM buffer. 6GB of VRAM was ludicrously overkill in 2013, to the point where people doubted whether any game could use the Titan's full memory capacity. Little did they know that in 2024, 6GB is often the minimum requirement to play games at ultra settings. PCGH reports that the Titan's 6GB frame buffer is one of the few things that enabled it to run some games at ultra settings at playable frame rates. The 6GB capacity allowed the review outlet to max out the texture quality and shadow quality without encountering micro-stuttering problems.

Another amazing trait of the GTX Titan is its overclockability. The Titan came out in an era when GPU overclocking was in a healthy state, and technologies such as GPU Boost were in their infancy. The fact that it can gain over 30% more performance from overclocking alone is downright impressive compared to today's GPUs. A modern RTX 40-series card can barely squeeze out 10% more performance (if that) from overclocking.


Aaron Klotz is a freelance writer for Tom’s Hardware US, covering news topics related to computer hardware such as CPUs and graphics cards.


bit_user: But GPUs have basically stayed on the Moore's Law curve, at least until recently. So, this would be almost like comparing a 2.53 GHz Northwood Pentium 4 against a 486DX2-66.

The thing that's improved least is memory bandwidth (leaving aside HBM). However, bigger & faster caches have gone a long way towards mitigating that bottleneck.

rluker5: Kepler was a one-thing-at-a-time arch, and games eventually went more and more to concurrent compute around 2016. (That was also the time that SLI compatibility started to fade away.) There are still games it does well in, but the list of ones it currently does badly in dwarfs any issues Arc has.

But it is nice to see a review where they are showing the architecture's full potential. A clock speed of 1250MHz was definitely doable, and reviewers generally did comparisons with the card running at less than 1GHz. That bothered me because I was getting 60 fps in newly released W3 med-hi@4k with SLI 780 Tis running at 1250. It was an awesome gaming experience. I'm still using the same TV monitor.

Kepler was good for its time. That time has passed.

yeyibi: According to Tom's Hardware GPU hierarchy, the original GTX Titan is a bit weaker than a GeForce GTX 1660.

a3dstorm: I have owned one since shortly after it was released. It still works today, although it is not installed in any system at the moment. It was the longest-lasting GPU I ever owned, and I've been building my own PCs since 1998. I used it on a hackintosh connected to a 60Hz 1440p IPS Shimian monitor up to macOS Mojave (so for 6 years I ran the GTX Titan and was running everything at 1440p since the first day I installed that GPU in my system; I've been enjoying 1440p gaming and daily computer use for 11 years now) and also used it on Windows up to that point. I built a new system in 2019 when it became impossible to use an Nvidia GPU past the Kepler generation on macOS. I didn't have much budget for a GPU at the time of the new build, so I bought a cheap RX 580 that was barely better than my 6-year-old Titan in most games on Windows. It lasted me just a couple of years, until GPUs became scarce and too expensive due to Bitcoin mining, and I had to settle for an RX 6600 as an upgrade to the barely passable RX 580. I have now decided to abandon hackintoshing altogether, since it's no longer a viable option now that Apple has abandoned Intel CPUs, so my next GPU will certainly be an Nvidia again. But AMD cards have come a long way, and they are pretty good if you don't need all the bells and whistles.

Dr3ams: For the last 10 years my limit has been 550 Euros for a GPU. I don't see that changing in the future.

thisisaname: It is called progress and 11 years of it is a lot of time to make a lot of progress.

It is a shame the price has in recent years made that progress regressive in terms of price to performance.

salgado18: If I had one, I'd replace it *only* because of missing features not running some games. Otherwise, such an old flagship running games like an entry-level card today means it is still a good card. That's why I don't like the idea of frequent upgrades seen in most reviews, in the form of "it runs today's games at 60 fps, so it's good", which means you need to upgrade in two years. Get a stronger card, slightly above what you need (a 1440p card for 1080p gaming, for example), and make your money more valuable.

Neilbob: bit_user said: "But GPUs have basically stayed on the Moore's Law curve, at least until recently. So, this would be almost like comparing a 2.53 GHz Northwood Pentium 4 against a 486DX2-66."

Yup, that 66MHz 486 would win the match-up fairly easily!

Okay, I am of course jesting.

It would take the 100 MHz DX4 to achieve the feat.
